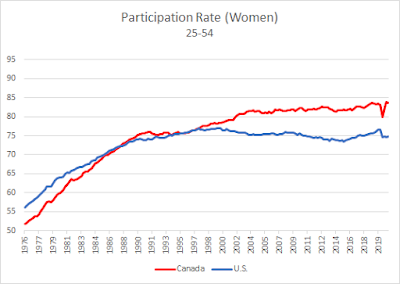

I've been thinking a bit lately about theories of the business cycle (these days of COVID-19 leave a lot of time for reflection). More precisely, I've been thinking about the way some of these theories have evolved over my lifetime, as seen through my own training in the field. From my (admittedly narrow) perspective as a researcher and advisor at a central bank, the journey beginning c. 1960 seems to have taken the following steps: (1) the Phillips Curve and the Natural Rate Hypothesis; (2) Real Business Cycle (RBC) theory; (3) New Keynesian theory. It seems like we might be ready to take the next step. I'll offer some thoughts on this at the end, for whatever they're worth.

There's no easy way to summarize the state of macroeconomic thinking, of course. But it seems clear that, at any given time, some voices and ways of thinking are more dominant than others. By the time the 1960s rolled around, there seemed to be a consensus that monetary and fiscal policy should be used to stabilize the business cycle. The main issue, in this regard, was which set of instruments was better suited for the job (see, for example, this classic debate between Milton Friedman and Walter Heller).

Central to macroeconomic thinking at the time was a concept called the Phillips Curve (PC). There is a subtle, but important, distinction to make here between the PC as a statistical correlation and the PC as a

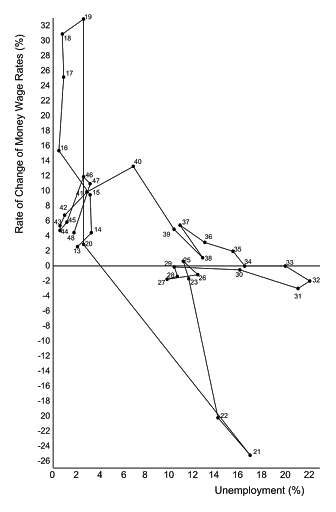

theory of that statistical relationship. In 1958, Phillips noticed an interesting pattern in the data: nominal wage growth seemed negatively correlated with the unemployment rate in the U.K. over the period 1913-48 (see diagram to the right). How to interpret this correlation? One theory is that when the unemployment rate is high, workers are easy to find and their bargaining position is weak, leading to small nominal wage gains. Conversely, when unemployment is low, available workers are scarce and their bargaining position is strong, leading to large nominal wage gains.

Then, in 1960, Paul Samuelson and Robert Solow wrote their classic piece "Problem of Achieving and Maintaining a Stable Price-Level: Analytical Aspects of Anti-Inflation Policy." Then, as now, the authors lamented the lack of consensus on a theory of price inflation. They reviewed various cost-push and demand-pull hypotheses, noted problems of identification, and called for micro-data to help settle the issue. They also mentioned Phillips' article and noted how the same diagram for the U.S. looked like a shotgun blast (little correlation, except for some sub-samples). Then they translated the Phillips curve using price inflation instead of wage inflation. No data was sacrificed in this exercise; their "theory" was summarized in a simple diagram.

I put "theory" in quotes in the passage above because the theory (explanation) was never clear to me. In particular, while I could see how an increase in the rate of unemployment might depress the level of wages, I could not grasp how it could influence the rate of growth of wages for any prolonged period of time. This logical inconsistency was resolved by the Phelps-Friedman natural rate hypothesis; see Farmer (2013) for a summary and critique.

The TL;DR version of this hypothesis is that the PC is negatively sloped only in the short-run, but vertical in the long-run. So, while monetary policy (increasing the rate of inflation) could lower the unemployment rate below its natural rate, it could only do so temporarily. Eventually, the unemployment rate would move back to its natural rate at the higher rate of inflation. This hypothesis seemed to provide a compelling interpretation of the stagflation (high inflation and high unemployment) experienced in the 1970s. It also seemed to explain the success of Volcker's disinflation policy in the 1980s. Nevertheless, uneasiness in the state of the theory remained and a new (well, nothing is ever completely new) way of theorizing was on the horizon.
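To make the "vertical in the long run" logic concrete, here is a minimal sketch of an expectations-augmented Phillips curve, inflation = expected inflation − α × (unemployment − natural rate). Expectations are assumed to be adaptive (expected inflation equals last period's inflation) purely for illustration, and the slope α, the natural rate of 5%, and the starting inflation rate are hypothetical values, not estimates.

```python
def simulate_inflation(unemployment, u_star=5.0, alpha=0.5, pi0=2.0):
    """Accelerationist Phillips curve with adaptive expectations (illustrative).

    Assumes expected inflation = last period's inflation, so
    pi(t) = pi(t-1) - alpha * (u(t) - u_star).
    """
    path = []
    pi_prev = pi0
    for u in unemployment:
        pi = pi_prev - alpha * (u - u_star)  # unemployment below u_star pushes inflation up
        path.append(pi)
        pi_prev = pi  # this period's inflation becomes next period's expectation
    return path


# Hold unemployment one point below the natural rate for 4 periods, then let it return.
u_path = [4.0] * 4 + [5.0] * 3
for t, pi in enumerate(simulate_inflation(u_path)):
    print(t, pi)
```

Holding unemployment below its natural rate requires inflation to keep rising; once unemployment returns to the natural rate, inflation stops rising but stays at the new, higher level rather than falling back.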

By the time I got to grad school in the late 1980s, "real business cycle theory" was in vogue; see Charles Plosser's summary here and Bob King's lecture notes here.

There was a lot going on with this program. A central thesis of RBC theory is that the phenomena of economic growth and business cycles are inextricably linked. This is, of course, an old idea in economics going back at least to Dennis Robertson (see this review by Charles Goodhart) and explored extensively by a number of Austrian economists, like Joseph Schumpeter.

The idea that "the business cycle" is to some extent a byproduct of the process of economic development is an attractive hypothesis. Economic growth is driven by technological innovation and diffusion, and perhaps regulatory policies. There is no a priori reason to expect these "real" processes to evolve in a "smooth" manner. In fact, these changes appear to arrive randomly and with little or no mean-reverting properties. It would truly be a marvel if the business cycle did not exist.

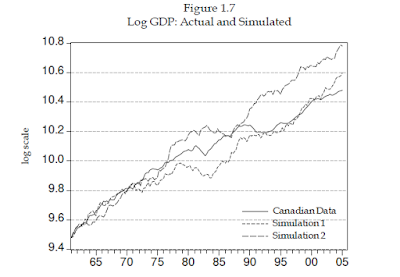

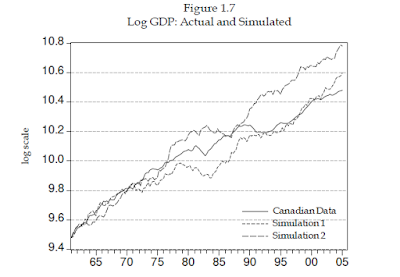

The notion of "no mean-reverting properties" is important. It basically means that technology/policy shocks are largely permanent (or at least, highly persistent). If macroeconomic variables like GDP inherit this property, then a "cycle" (the tendency for a variable to return to some long-run trend) does not even exist, and if you think you see one, it's only a figment of your imagination. For this reason, early proponents of RBC theory preferred the label "fluctuations" over "cycle." This view was supported by the fact that econometricians had a hard time rejecting the hypothesis that real GDP followed a random walk (with drift). For example, here is Canadian GDP plotted against two realizations of a random walk with drift.
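Realizations like the ones plotted against Canadian GDP take only a few lines to generate. This sketch treats the series as log GDP following y(t) = y(t−1) + drift + shock, with normally distributed shocks; the drift of 0.5% per quarter and shock size of 1% are hypothetical values chosen for illustration, not estimates from Canadian data.

```python
import random


def random_walk_with_drift(n, drift=0.005, sigma=0.01, y0=0.0, seed=None):
    """Simulate n periods of y_t = y_{t-1} + drift + e_t, with e_t ~ N(0, sigma^2).

    Read y_t as log GDP: drift is average growth per period, and each shock
    e_t shifts the level permanently (nothing pulls it back to a trend).
    """
    rng = random.Random(seed)
    path = [y0]
    for _ in range(n - 1):
        path.append(path[-1] + drift + rng.gauss(0.0, sigma))
    return path


# Two hypothetical 40-year quarterly realizations (illustrative parameters).
walk_a = random_walk_with_drift(160, seed=1)
walk_b = random_walk_with_drift(160, seed=2)
print(f"final log level, walk A: {walk_a[-1]:.3f}; walk B: {walk_b[-1]:.3f}")
```

Because each shock is carried forward one-for-one, the expected level k periods ahead is simply today's level plus k times the drift: a bad quarter is never "made up" later, which is exactly the absence of mean reversion described above.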

This perspective took hold at a time when the cost of computing power was falling dramatically, which permitted economists to study models that were too complicated to analyze with conventional "pencil and paper" methods. Inspiration was provided by Lucas (1980), who wrote:

“Our task, as I see it…is to write a FORTRAN program that will accept specific economic policy rules as ‘input’ and will generate as ‘output’ statistics describing the operating characteristics of time series we care about, which are predicted to result from these policies.”

And so that's what people did. But what sort of statistics were model economies supposed to reproduce? Once again, it was Lucas (1976) who provided the needed guidance. The empirical business cycle regularities emphasized by Lucas were "co-movements" between different aggregate time-series. Employment, for example, is "pro-cyclical" (tends to move in the same direction as GDP) around "trend." These types of regularities can be captured by statistics like correlations. But these correlations (and standard deviations) only make sense for stationary time-series, and the data is mostly non-stationary. So, what to do?

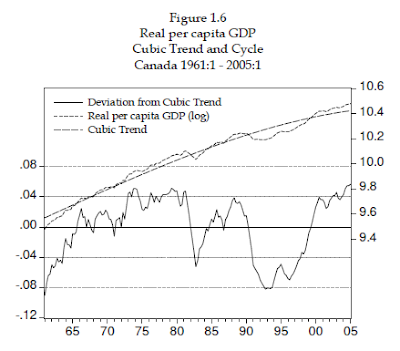

Transforming the data through first-differencing (i.e., looking at growth rates instead of levels) is one way to render (much of) the data stationary. Another approach was made popular by Prescott (1986), who advocated a method that most people employ: draw a smooth line through the data, label it "trend," and then examine the behavior of "deviations from trend." Something like this,

It's important to note that Prescott viewed the trend line in the figure above as a "statistical trend," not an "economic trend." To him, there was no deterministic trend, since the data was being generated by a random walk (so the actual trend is stochastic). Nevertheless, drawing a smooth trend line was a useful way to render the data stationary. The idea was to apply the same de-trending procedure to actual data and simulated data, and then compare statistical properties across model and data.
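The smoothing procedure usually associated with Prescott's approach is the Hodrick-Prescott (HP) filter, which chooses a trend that balances closeness to the data against smoothness. The sketch below solves the HP first-order condition (I + λD'D)·trend = y directly, using the conventional λ = 1600 for quarterly data, and then computes a co-movement statistic (the correlation of cyclical components). The two input series are made-up placeholders, not actual data; dense storage is used for readability, whereas library implementations (statsmodels, for example, ships an HP filter) rely on sparse solvers.

```python
import math


def hp_filter(y, lam=1600.0):
    """Hodrick-Prescott filter: trend tau solves (I + lam * D'D) tau = y.

    D is the (n-2) x n second-difference operator, so lam penalizes changes
    in the trend's growth rate. Returns (trend, cycle), where cycle = y - trend.
    """
    n = len(y)
    if n < 3:
        raise ValueError("need at least 3 observations")
    # A = I + lam * D'D is symmetric, positive definite and pentadiagonal.
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    weights = (1.0, -2.0, 1.0)
    for r in range(n - 2):
        for a in range(3):
            for b in range(3):
                A[r + a][r + b] += lam * weights[a] * weights[b]
    rhs = [float(v) for v in y]
    # Banded Gaussian elimination: A is positive definite, so no pivoting is
    # needed and elimination creates no nonzeros outside the band.
    for k in range(n):
        for i in range(k + 1, min(k + 3, n)):
            factor = A[i][k] / A[k][k]
            for j in range(k, min(k + 3, n)):
                A[i][j] -= factor * A[k][j]
            rhs[i] -= factor * rhs[k]
    trend = [0.0] * n
    for i in range(n - 1, -1, -1):
        upper = sum(A[i][j] * trend[j] for j in range(i + 1, min(i + 3, n)))
        trend[i] = (rhs[i] - upper) / A[i][i]
    cycle = [v - t for v, t in zip(y, trend)]
    return trend, cycle


def correlation(x, y):
    """Pearson correlation of two equal-length series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


# Hypothetical quarterly log series: linear growth plus a 5-year wave,
# with "employment" lagging "GDP" slightly. Not actual data.
gdp = [0.005 * t + 0.02 * math.sin(2 * math.pi * t / 20) for t in range(80)]
emp = [0.002 * t + 0.008 * math.sin(2 * math.pi * t / 20 - 0.3) for t in range(80)]
_, gdp_cycle = hp_filter(gdp)
_, emp_cycle = hp_filter(emp)
print(f"corr of HP cycles: {correlation(gdp_cycle, emp_cycle):.2f}")
```

In the model-versus-data exercise, the same `hp_filter` call would be applied to both the actual series and the model-simulated series before comparing standard deviations and correlations. Note that a straight line passes through the filter untouched (its cycle is zero), which is why the linear growth component drops out of the cycles here.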

The point of mentioning this is that no one involved in this program was conditioned to interpret the economy as "overheating" or in "depression." Growing economies exhibited fluctuations--sometimes big and persistent fluctuations. The question was how much of these observed fluctuations could be attributed purely to the process of economic development (technological change), without reference to monetary or financial factors? I think it's fair to say that the answer turned out to be "not much, at least, not at business cycle frequencies." The important action seemed to occur at lower frequencies. Lucas (1988) once again provided the lead when he remarked "Once one starts to think about growth, it is hard to think about anything else." And so, the narrow RBC approach turned its attention to low-frequency dynamics; e.g., see my interview with Lee Ohanian here.

Of course, many economists never bought into the idea that monetary and financial factors were unimportant for understanding business cycles. Allen and Gale, for example, schooled us on financial fragility; see here. But this branch of the literature never really made much headway in mainstream macro, at least, not before 2008. Financial crises were something that happened in history, or in other parts of the world. Instead, macroeconomists looked back to their roots in the 1960s and embedded a version of the PC into an RBC model to produce what is now known as the New Keynesian framework. Short-run money non-neutrality was achieved by assuming that nominal price-setting behavior was subject to frictions, rendering nominal prices "sticky." In this environment, shocks to the economy are not absorbed efficiently, at least, not in the absence of an appropriate monetary policy. And so, drawing inspiration from John Taylor and Michael Woodford, the framework added an interest rate policy rule now known as the Taylor rule. Today, the basic NK model consists of these three core elements:

[1] An IS curve: Relates aggregate demand to the real interest rate and shocks.

[2] A Phillips Curve: Relates the rate of inflation (around trend) to the output gap.

[3] A Taylor Rule: Describes how interest rate policy reacts to output and inflation gaps.
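For readers who want the equations, here is one common log-linearized textbook form of these three elements. The notation follows Galí's textbook treatment and is an illustrative choice, not the only specification in use: $x_t$ is the output gap, $\pi_t$ is inflation (in the baseline case the steady-state inflation rate is zero), $i_t$ is the nominal policy rate, $r^n_t$ is the natural real rate of interest, $v_t$ is a policy shock, $\rho$ is the time discount rate, and $\sigma$, $\beta$, $\kappa$, $\phi_\pi$, $\phi_x$ are parameters.

$$
\begin{aligned}
\text{[1] IS curve:}\quad & x_t = \mathbb{E}_t x_{t+1} - \frac{1}{\sigma}\left(i_t - \mathbb{E}_t \pi_{t+1} - r^n_t\right) \\
\text{[2] Phillips curve:}\quad & \pi_t = \beta\, \mathbb{E}_t \pi_{t+1} + \kappa\, x_t \\
\text{[3] Taylor rule:}\quad & i_t = \rho + \phi_\pi \pi_t + \phi_x x_t + v_t
\end{aligned}
$$

Equation [2] is the NK Phillips curve: with $\kappa > 0$, a positive output gap raises current inflation for given expectations.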

I have to be honest with you. I never took a liking to the NK model. I'm more of an Old Keynesian, similar to Roger Farmer (we share the same supervisor, so perhaps this is no accident). In any case, the NK framework became (and continues to be) a core thought-organizing principle for central bank economists around the world. It has become a sort of lingua franca in academic macro circles. And if you don't know how to speak its language, you're going to have a hard time communicating with the orthodoxy.

Of the three basic elements of the NK model, I think the NK Phillips Curve (which embeds the natural rate hypothesis) has resulted in the most mischief; at least, from the perspective of advising the conduct of monetary policy. The concept is firmly embedded in the minds of many macroeconomists and policymakers. Consider, for example, Greg Mankiw's recent piece "Yes, There is a Trade-Off Between Inflation and Unemployment."

Today, most economists believe there is a trade-off between inflation and unemployment in the sense that actions taken by a central bank push these variables in opposite directions. As a corollary, they also believe there must be a minimum level of unemployment that the economy can sustain without inflation rising too high. But for various reasons, that level fluctuates and is difficult to determine.

The Fed’s job is to balance the competing risks of rising unemployment and rising inflation. Striking just the right balance is never easy. The first step, however, is to recognize that the Phillips curve is always out there lurking.

The Phillips curve is always lurking. The message for a central banker is "sure, inflation and unemployment may be low for now, but if we keep monetary policy where it is and permit the unemployment rate to fall further, we will risk higher inflation in the future." I'm not sure if economists who write in this manner are aware that they're making it sound like workers are somehow responsible for inflation. Central banker to workers: "I'm sorry, but we need to keep some of you unemployed...it's the inflation, you see."

There is evidence that this line of thinking influenced the FOMC in 2015 in its decision to "lift off" and return the policy rate to some historically normal level; see my post here explaining the pros and cons in the lift-off debate. By the start of 2014, there was considerable pressure on the Fed to begin "normalizing" its policy rate. By mid-2014, the expectation of "lift off" likely contributed to significant USD appreciation and the economic weakness that followed. If I recall correctly, Vice Chair Stan Fischer started off the year by announcing that four rate hikes for 2015 were in order (as it turned out, the Fed only raised rates once, in December). To some observers, this all seemed very strange. After all, the unemployment rate was still above its estimated "natural" rate (5%) and inflation continued to undershoot its 2% target. What was going on?

What was going on was the Phillips curve. Here is Chair Yellen at the March 17-18, 2015 FOMC meeting (transcript available here):

If we adopt alternative B, one criterion for an initial tightening is that we need to be reasonably confident that inflation will move back to 2 percent over the medium term. For the remainder of this year, my guess is that it will be hard to point to data demonstrating that inflation is actually moving up toward our objective. Measured on a 12-month basis, both core and headline inflation will very likely be running below 1½ percent all year. That means that if we decide to start tightening later this year, a development that I think is likely, we will have to justify our inflation forecasts using indirect evidence, historical experience, and economic theory.

The argument from history and economic theory seems straightforward. Experience here and abroad teaches us that, as resource utilization tightens, eventually inflation will begin to rise. To me, this seems like a simple matter of demand and supply. So the more labor and product markets tighten, the more confident I’ll become in the inflation outlook. Because of the lags in monetary policy, the current high degree of monetary accommodation, and the speed at which the unemployment rate is coming down, it would, to my mind, be imprudent to wait until inflation is much closer to 2 percent to begin to normalize policy. I consider this a strong argument for an initial tightening with inflation still at low levels, and it’s one that I plan to make. But I also recognize and am concerned that, at least in recent years, the empirical relationship between slack and inflation has been quite weak.

Now, I don't want to make too much of this particular episode. Personally, I don't think it had a major impact on the recovery dynamic. But I do think it had an impact; in particular, the pace of improvement in labor market conditions temporarily slowed. It was an unforced error (as I think other members of the Committee sensed as well).

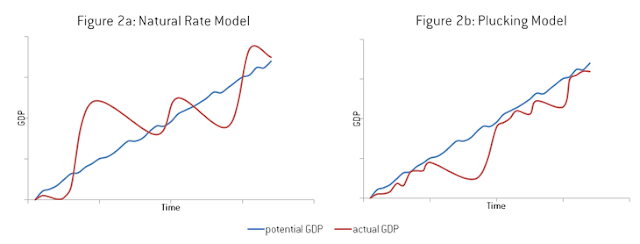

I think the lift-off episode has contributed to a general re-thinking of the Phillips curve and the natural rate hypothesis. The notion of an economy operating at "excess capacity" has always seemed a bit strange to me, and the idea of excess capacity as a cause of inflation (as opposed to a force operating on the price level) even stranger. Perhaps it is time to revisit Milton Friedman's "plucking model." Instead of drawing a smooth line through the center of a time-series, Friedman drew a line that defined a ceiling (a capacity constraint). Shocks to the economy manifest themselves as "downward plucks" (as if plucking on an elastic band).
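A minimal simulation can make the plucking picture concrete. In this sketch (all assumptions, not Friedman's own specification), log capacity grows at a constant rate, occasional random shocks pluck output down by a fixed amount, and the gap then closes geometrically, like an elastic band springing back. The growth rate, pluck probability, pluck size, and recovery speed are hypothetical values.

```python
import random


def plucking_path(n, growth=0.005, pluck_prob=0.05, pluck_size=0.04,
                  recovery=0.8, seed=None):
    """Simulate log output as a growing ceiling minus a nonnegative gap.

    Each period the existing gap shrinks by the factor `recovery` (the band
    springing back); with probability `pluck_prob` a new shock widens the gap
    by `pluck_size` (a downward pluck). Output never rises above the ceiling.
    """
    rng = random.Random(seed)
    ceiling, output = [], []
    gap = 0.0
    for t in range(n):
        cap = growth * t          # capacity ceiling grows at a constant rate
        gap *= recovery           # partial recovery toward the ceiling
        if rng.random() < pluck_prob:
            gap += pluck_size     # a downward pluck
        ceiling.append(cap)
        output.append(cap - gap)
    return ceiling, output


ceiling, output = plucking_path(200, seed=3)
deviations = [o - c for c, o in zip(ceiling, output)]
print(f"max deviation: {max(deviations):.4f}, min deviation: {min(deviations):.4f}")
```

Deviations from the ceiling are never positive, so there is no "overheating" in this picture; by contrast, deviations from a trend drawn through the middle of the data must take both signs by construction.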

The plucking model is consistent with the observed cyclical asymmetry in unemployment rate fluctuations, and labor market search models are a natural way to model that asymmetry. In case you're interested, I develop a super-simple (and dare I say, elegant) search model here to demonstrate (and test) the idea ("Evidence and Theory on the Cyclical Asymmetry in Unemployment Rate Fluctuations," CJE 1997). See also my blog post here as well as some recent work by Ferraro (RED, 2018) and Dupraz, Nakamura and Steinsson (2019). I like where this is going!
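The stock-flow accounting at the heart of labor market search models is easy to sketch: next period's unemployment rate equals this period's, plus separations s × (1 − u), minus job finds f × u, with steady state u* = s / (s + f). This is not the model from the CJE paper. The parameter values and the recession shock (a brief jump in separations plus a job-finding rate that recovers only gradually, standing in for the slow rebuilding of relationships) are hypothetical, and the asymmetry in the output comes from that assumed shock.

```python
def simulate_unemployment(periods, s_base=0.02, f_base=0.28, shock_start=5,
                          shock_len=3, s_shock=0.05, f_shock=0.15,
                          f_persistence=0.9):
    """Unemployment-rate flows: u' = u + s * (1 - u) - f * u.

    s is the separation rate and f the job-finding rate. The economy starts in
    the steady state u* = s / (s + f). During the (hypothetical) recession,
    separations jump and job finding falls; afterwards f returns to normal
    only gradually.
    """
    u = s_base / (s_base + f_base)
    f = f_base
    path = [u]
    for t in range(1, periods):
        in_shock = shock_start <= t < shock_start + shock_len
        s = s_shock if in_shock else s_base
        f = f_shock if in_shock else f_base + f_persistence * (f - f_base)
        u = u + s * (1.0 - u) - f * u
        path.append(u)
    return path


path = simulate_unemployment(60)
u_star = 0.02 / (0.02 + 0.28)
peak = max(range(len(path)), key=path.__getitem__)
# Period 4 is the last pre-shock period; count how long the rise and fall take.
back = next(t for t in range(peak, len(path)) if path[t] < u_star + 0.005)
print(f"periods rising: {peak - 4}, periods falling to near u*: {back - peak}")
```

Unemployment shoots up within a few periods but takes far longer to drift back toward its steady state: sharp downward plucks in employment followed by slow recoveries.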

One attractive feature of search models, in my view, is that they model relationship formation. Relationships provide a very different mechanism for coordinating economic activity relative to the canonical economic view of anonymous spot exchange in centralized markets. In a relationship, spot prices do not matter as much as the dynamic path of these prices (and other important aspects) over the course of a relationship (see my critique of the sticky price hypothesis here). The observation that retailers, in the early days of COVID-19, voluntarily rationed goods instead of raising prices makes little sense in anonymous spot exchange, but makes perfect sense for a merchant concerned with maintaining a good relationship with his or her customers. And merchant-supplier relationships can handle shortages without price signals (we're out of toilet paper, please send more!). In financial markets too, the amount of time that is spent forming and maintaining credit relationships is hugely underappreciated in economic modeling. Search theory turns out to be useful for interpreting the way money and bond markets work too. These markets are not like the centralized markets we see modeled in textbooks; they operate as decentralized over-the-counter (OTC) markets, where relationships are key. One reason economies sometimes take so long to recover after a shock is that the shock has destroyed an existing set of relationships. And it takes time to rebuild relationship capital.

Notions of "overheating" in this context probably do not apply to labor market variables, although there is still the possibility of an overaccumulation of certain types of physical capital in a boom (what the Austrians label "malinvestment"). Any "overheating" is likely to manifest itself primarily in asset prices. And sudden crashes in asset prices (whether driven by fundamentals or not) can have significant consequences for real economic activity if asset valuations are used to support lines of credit.

Finally, we need a good theory of inflation. The NKPC theory of inflation is not, in my view, a completely satisfactory theory in this regard. To begin, it simply assumes that the central bank can target a long-run rate of inflation (implicitly, with the support of a Ricardian fiscal policy, though this is rarely, if ever, mentioned). At best, it is a theory of how inflation can temporarily depart from its long-run target and how interest rate policy can be used to influence transition dynamics. But the really interesting questions, in my view, have to do with monetary and fiscal policy coordination and what this entails for the ability of an "independent" central bank even to determine the long-run rate of inflation (Sargent and Wallace, 1981).
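A stylized steady-state calculation, far simpler than Sargent and Wallace's setup, shows how fiscal policy can end up pinning down inflation. Assume (hypothetically) no government debt, zero real growth, a constant real primary deficit d financed entirely by money creation, and fixed real money balances m, both measured as shares of output. Steady-state real seigniorage is then m × π / (1 + π), so balancing the budget requires π = d / (m − d), whatever inflation target the central bank announces.

```python
def inflation_required(deficit, money_demand):
    """Steady-state inflation that finances a real primary deficit by seigniorage.

    Illustrative assumptions: no debt, zero real growth, and constant real
    balances m (money demand does not respond to inflation here), so
    seigniorage m * pi / (1 + pi) = d  implies  pi = d / (m - d).
    """
    if not 0 <= deficit < money_demand:
        raise ValueError("need 0 <= deficit < money_demand")
    return deficit / (money_demand - deficit)


for d in (0.0, 0.01, 0.02):
    print(d, round(inflation_required(d, money_demand=0.2), 4))
```

With real balances at 20% of output, a deficit of 2% of output requires steady-state inflation of about 11%. Holding money demand fixed is a simplification: in practice real balances shrink as inflation rises, which makes the required inflation rate higher still.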

I know what I've described only scratches the surface of this amazingly deep and broad field. Most of you have no doubt lived through your own process of discovery and contemplation in the world of macroeconomic theorizing. Feel free to share your thoughts below.